Unlocking Security Data: The Missing Piece in the Modern Data Stack

In the realm of data analytics, the “modern data stack” has revolutionized how businesses gather and consolidate data, facilitating informed decision-making. However, while these advances have enhanced various departments, they’ve left a crucial aspect untouched: security data.

What is ETL?

Before we dive deeper, let’s understand the term “ETL.” ETL stands for Extract, Transform, Load. It refers to the process of extracting data from various sources, transforming (aka normalizing) it into a usable format, and loading it into a destination where it can be analyzed. ETL is the backbone of data integration, allowing organizations to harmonize diverse data into a unified structure.
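
To make the three steps concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the two "scanner" sources, their field names, and the severity scale are hypothetical, and an in-memory SQLite table stands in for a real analytics destination.

```python
import sqlite3

# Hypothetical records, shaped the way two different tools might return them.
SCANNER_A = [{"host": "web-01", "sev": "HIGH"}, {"host": "db-01", "sev": "low"}]
SCANNER_B = [{"asset_name": "web-02", "severity": 3}]


def extract():
    """Extract: gather raw records from each source (hard-coded here)."""
    for rec in SCANNER_A:
        yield "scanner_a", rec
    for rec in SCANNER_B:
        yield "scanner_b", rec


def transform(source, rec):
    """Transform: map each source's fields onto one shared shape."""
    if source == "scanner_a":
        return (source, rec["host"], rec["sev"].lower())
    levels = {1: "low", 2: "medium", 3: "high"}  # assumed numeric scale
    return (source, rec["asset_name"], levels[rec["severity"]])


def load(rows):
    """Load: write the normalized rows somewhere they can be queried."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE findings (source TEXT, asset TEXT, severity TEXT)")
    conn.executemany("INSERT INTO findings VALUES (?, ?, ?)", rows)
    return conn


conn = load([transform(source, rec) for source, rec in extract()])
high = conn.execute(
    "SELECT asset FROM findings WHERE severity = 'high' ORDER BY asset"
).fetchall()
print(high)
```

Once both sources share one shape, a single query can answer a question that spans tools, which is the point of the transform step.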

The Modern Data Stack’s Blind Spot

Getting security data right matters more than ever. Businesses now rely on comprehensive security metrics to make strategic decisions, and every stakeholder, including the Board of Directors and C-level executives, is accountable for security. Yet creating these metrics is a painstaking, manual, and exorbitantly expensive process, and traditional analytics tools don’t cut it.

The Challenge of Security Data

Security data differs significantly from typical analytics data. While the latter is often stateless (like a user clicking on an ad), security data is decidedly stateful. Think about a virtual machine whose network configuration evolves or a vulnerability that gets patched. The ever-changing nature of security makes it challenging to measure its effectiveness and ROI accurately.
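
A small sketch shows why state matters. A stateless event can simply be counted, but a question like "how long was this vulnerability open?" has to be reconstructed from successive observations of the same finding. The finding IDs, asset names, and scan dates below are hypothetical.

```python
from datetime import datetime

# Hypothetical scan results: the state of one finding on one asset at scan time.
observations = [
    ("VULN-1", "vm-1", datetime(2024, 1, 1), "open"),
    ("VULN-1", "vm-1", datetime(2024, 1, 8), "open"),
    ("VULN-1", "vm-1", datetime(2024, 1, 15), "fixed"),
    ("VULN-2", "vm-2", datetime(2024, 1, 8), "open"),
]


def open_durations(observations):
    """Days from the first 'open' sighting to the first later 'fixed' one."""
    first_open = {}
    durations = {}
    for finding, asset, seen_at, status in sorted(observations, key=lambda o: o[2]):
        key = (finding, asset)
        if status == "open":
            # Remember only the earliest time the finding was seen open.
            first_open.setdefault(key, seen_at)
        elif status == "fixed" and key in first_open and key not in durations:
            durations[key] = (seen_at - first_open[key]).days
    return durations


durations = open_durations(observations)
print(durations)
```

Findings that are still open, like the second one here, have no duration yet. A metric built on this data has to decide how to treat them, which is a choice a simple event count never has to make.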

Moreover, many security vendor APIs are notorious for their complexity. But can we blame them? Their primary goal is to provide value to security teams, not to make data extraction a breeze.

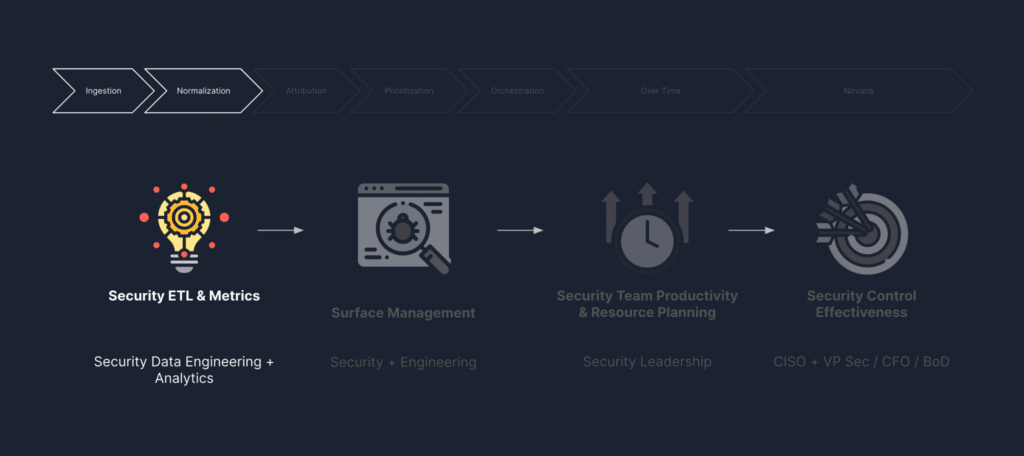

Dassana’s Answer: Security ETL

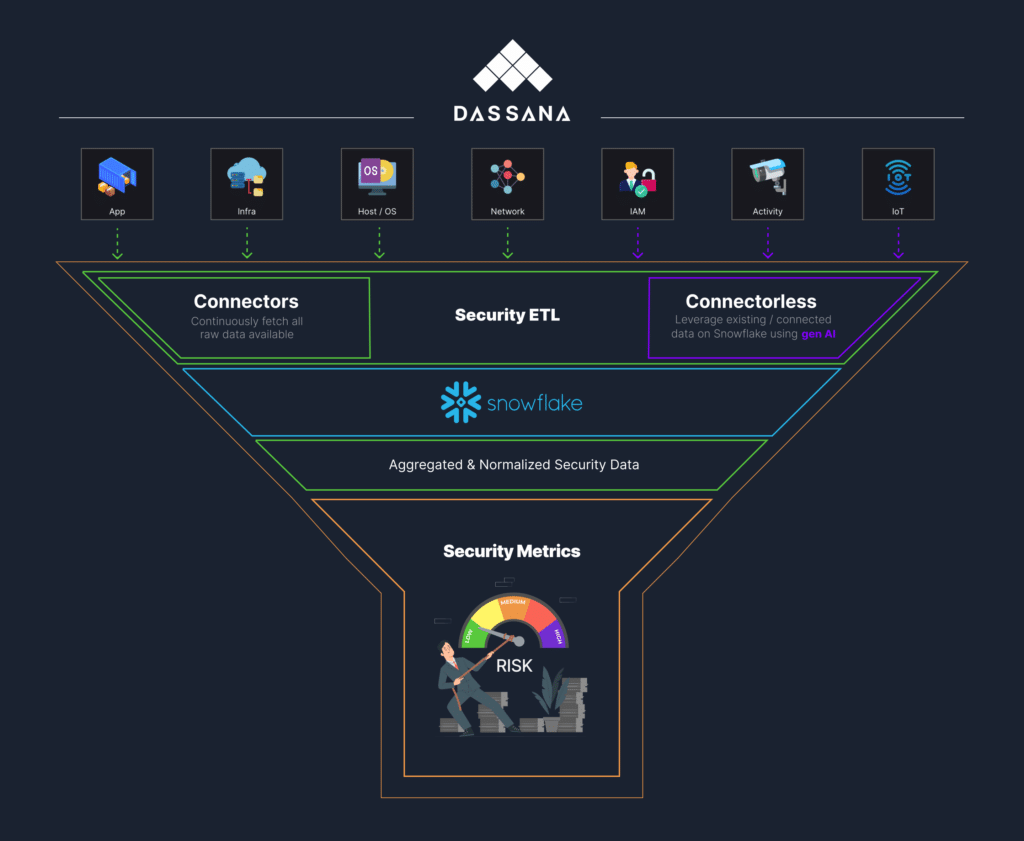

That’s where Dassana comes in. We’ve developed a specialized security ETL platform designed to alleviate the struggles faced by security teams. Our approach combines traditional connectors with a groundbreaking connectorless method. The result? A comprehensive solution that aggregates high-quality data and insights from all your security tools, creating what we call the Security Data Lake.

Connectors: Reliable Data Infrastructure

In a survey of our initial customers, we found that enterprises spend on average 175k (labor, infra costs, etc.) to build a security ETL pipeline that delivers metrics, plus an ongoing 95k per year to maintain it. When these pipelines go down or drop records, data quality becomes a massive issue: the riskiest issues are the ones you have no visibility into. Dassana’s ETL connectors abstract this process away, delivering cost savings and reliability guarantees to the enterprise.

Dassana has architected a schemaless ingestion engine, which enables us to pull all raw data from security tool APIs rather than cherry-picking and indexing fields. Access to raw data provides high fidelity, ensuring all your metrics and reporting use cases are supported. We’ve also taken great care to understand the unique quirks of each vendor’s API, building battle-tested pipelines that deliver reliable datasets. Once the data is loaded in Snowflake, normalization is applied using Snowflake Stored Procedures. Raw data is preserved, and the normalized fields added alongside it make global analytics painless across all your tools and environments.
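
The idea of keeping raw data while adding normalized fields can be sketched in a few lines of Python. This is an illustration only, not Dassana's implementation (which, as described above, runs as Snowflake Stored Procedures); the tool names, field names, and mappings are all hypothetical.

```python
# Hypothetical per-source mappings: normalized field name -> raw field name.
MAPPINGS = {
    "tool_a": {"asset_id": "instanceId", "severity": "riskLevel"},
    "tool_b": {"asset_id": "resource", "severity": "sev"},
}


def normalize(source, raw):
    """Return the untouched raw record with normalized fields added alongside."""
    mapping = MAPPINGS[source]
    normalized = {field: raw.get(raw_key) for field, raw_key in mapping.items()}
    return {"source": source, "raw": raw, **normalized}


rows = [
    normalize(
        "tool_a",
        {"instanceId": "i-0abc1234", "riskLevel": "high", "extra": {"port": 22}},
    ),
    normalize("tool_b", {"resource": "i-0def5678", "sev": "low"}),
]

# Cross-tool analytics use only the normalized fields...
by_severity = {}
for row in rows:
    by_severity.setdefault(row["severity"], []).append(row["asset_id"])
print(by_severity)

# ...while fields nobody mapped are still available in the raw payload.
print(rows[0]["raw"]["extra"])
```

Because nothing is discarded at ingestion, a new metric that needs an unmapped field only requires a new mapping, not a new extraction.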

Connectorless: Leveraging Existing Dataflow

Recently, we’ve developed our Connectorless capability to leverage datasets already residing in your Security Data Lake. These datasets are often delivered through Snowflake Partner Integrations (e.g., Wiz and Lacework) and are valuable as they stand. Rather than create redundant data ingestions and unnecessary API exposure, Dassana can discover existing data and normalize it in place. For sources where schemas aren’t well-defined, Dassana infers normalization mappings by applying its Connectorless Generative AI model. These generated mappings are validated by heuristics, such as recognizing that a value starting with “i-” followed by 8 or 17 hexadecimal characters is likely an EC2 instance ID. By automatically discovering and normalizing data in your Security Data Lake, Dassana’s custom dashboarding can provide metrics and reports immediately after deployment.
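
The instance ID heuristic is easy to picture as code. The sketch below is an assumption about how such a check might look, not Dassana's actual validator: the function name and the 90% match threshold are hypothetical, and the pattern assumes the characters after “i-” are lowercase hexadecimal.

```python
import re

# "i-" followed by exactly 8 or exactly 17 lowercase hexadecimal characters.
EC2_INSTANCE_ID = re.compile(r"i-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def looks_like_instance_ids(values, threshold=0.9):
    """Check whether most non-empty string values in a column match the pattern.

    The threshold is a hypothetical tolerance for a few dirty values.
    """
    values = [v for v in values if isinstance(v, str) and v]
    if not values:
        return False
    matches = sum(1 for v in values if EC2_INSTANCE_ID.fullmatch(v))
    return matches / len(values) >= threshold


# A column that a generated mapping proposes as the asset ID field.
candidate = ["i-1234567890abcdef0", "i-0abc1234", "i-0123456789abcdef0"]
unrelated = ["web-01", "i-xyz", "10.0.0.5"]

candidate_ok = looks_like_instance_ids(candidate)
unrelated_ok = looks_like_instance_ids(unrelated)
print(candidate_ok, unrelated_ok)
```

Checking a whole column rather than a single value keeps one coincidental match from confirming a bad mapping.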

Deployment Trends: Hybrid ETL

We’re starting to see security teams adopt a hybrid model, bringing in data through both Connectors and Connectorless. For sources where data quality and reliability are of utmost importance (e.g., a cloud workload protection platform, or CWPP), organizations opt for a Dassana Connector, while bringing in their supplementary vulnerability scans and dynamic application security testing (DAST) datasets through our Connectorless model. That way, they have a rock-solid foundation to describe their environment, with the flexibility to add context from their point-solution tooling.

Stay Tuned for Vulnerability and Attack Surface Management Use Cases

So, why should you care about Dassana’s Security ETL? Because it simplifies, streamlines, and automates your security data analysis. In our upcoming blog posts, we’ll delve into vulnerability and attack surface management, and show how aggregating and normalizing data in your Security Data Lake through Dassana’s Security ETL unlocks both.